As our world gets more connected, new IoT applications are emerging in virtually every industry ranging from Automobiles and Healthcare to Energy. IoT is everywhere.

Research shows the number of connected devices hit ~40 billion at the end of 202, and that number will keep growing as more devices come online. As energy consumption rises, solar power has become a major alternative for household and even commercial needs. Like any system, solar panel installations are prone to faults, which need to be tracked in real time and fixed before they lead to total system failure.

This is where the concept of Push Datasets comes into play. A Push Dataset is updated whenever an external application calls the Push API to send new data. Wyn Enterprise gives you the edge by providing Push Datasets and the required APIs for pushing data to these Datasets.

In this article, we’re going to take a deep dive into implementing these APIs to collect real-time data from grid-connected PV systems (GPVS). GPVS-Faults data can be used to design, validate, and compare algorithms for fault detection, diagnosis, and classification in PV system protection and reactive maintenance.

Push Dataset vs Streaming Dataset

Push Datasets are different from Streaming Datasets with respect to the retention time of the data. Streaming Datasets are stored for a short duration of time (30 seconds – 60 min) in Wyn Enterprise, for real-time data analysis. Data gets cleared automatically from Streaming Datasets based on the retention time. On the other hand, with Push Datasets, data is retained in a database until cleared. Streaming Datasets and Push Datasets can both be used to create Dashboards and Reports in Wyn Enterprise.

We’re going to look at the following Push Dataset APIs in this article:

- Push Data

- Clear Data

Create a Push Dataset in Wyn Enterprise

Before we dive into the APIs, let’s look at how we create a Push Dataset in Wyn Enterprise.

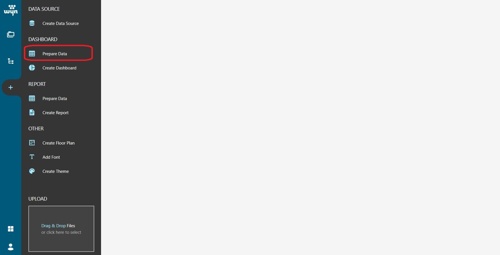

1. Go to the Resource portal and click ‘Prepare Data’

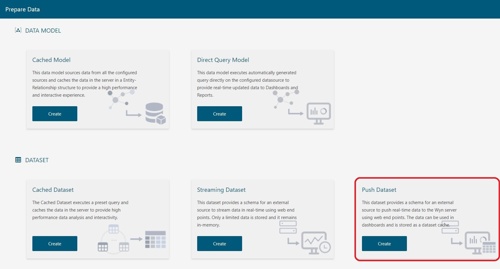

2. Select ‘Push Dataset’ as the type of Dataset to create

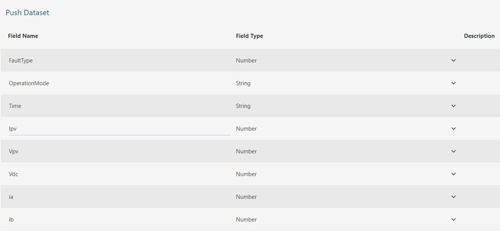

3. Add fields in the Push Dataset

4. Add a PushDataToken to be used for validation, when pushing data to the Dataset

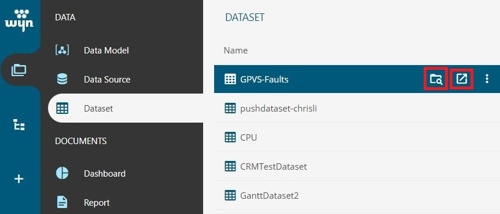

5. Click Save and give it a name (e.g. GPVS-Faults).

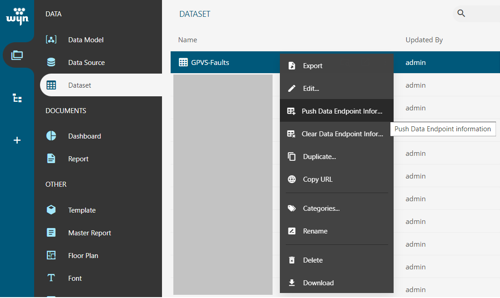

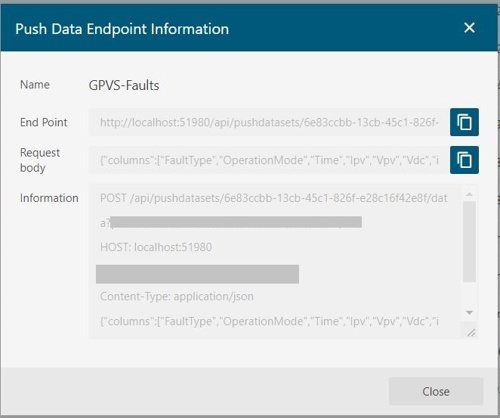

6. Select ‘Push Data Endpoint Information’ from Dataset options

7. Copy the EndPoint information from the dialog to use in your application

Create NodeJS application

To use the APIs, we’re going to create a Node.js application that will push data from the GPVS systems to the Push Dataset. You can create one using Visual Studio Code or any other IDE of your choice.

For our application, we’re going to use two packages:

- axios (calls the Push Dataset APIs); install it with npm

- fs (reads and writes data from/to the config file); it is built into Node.js, so there is nothing to install

Add a file named app.js (or you can choose another name).
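As a rough sketch of how app.js might read its settings with fs, the snippet below loads a JSON config file and checks that the three values the Push Dataset APIs need are present. The attribute names WynUrl, DatasetId and PushDataToken match the config.json used by the sample application in this article; the function name `loadConfig` and the validation rules are our own assumptions, not part of Wyn Enterprise.

```javascript
// Sketch only: loadConfig and its checks are hypothetical helpers.
const fs = require("fs");

// Attribute names used by the sample application's config.json.
const REQUIRED_KEYS = ["WynUrl", "DatasetId", "PushDataToken"];

function loadConfig(filePath) {
  // Read the file synchronously; this happens once at startup.
  const config = JSON.parse(fs.readFileSync(filePath, "utf8"));

  // Collect every key that is absent or blank so the error is actionable.
  const missing = REQUIRED_KEYS.filter(
    (key) => typeof config[key] !== "string" || config[key].trim() === ""
  );
  if (missing.length > 0) {
    throw new Error("config is missing: " + missing.join(", "));
  }

  // Strip trailing slashes so the URL can be joined with "/api/...".
  return { ...config, WynUrl: config.WynUrl.replace(/\/+$/, "") };
}

// Example usage (assumes config.json sits next to app.js):
// const config = loadConfig(__dirname + "/config.json");
```

Failing fast on a missing token or Dataset Id is a design choice: it is easier to spot a bad config at startup than to debug rejected requests later.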

Now that we have the application set up, we can use the APIs to push data to the Push Dataset.

Populate Push Dataset

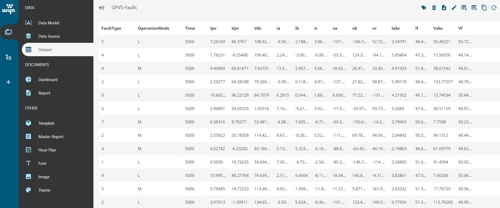

Once we have the Push Dataset set up on the Wyn server, we can push data to it using the Push Data API (endpoint copied in Step 7 earlier). The GPVS-Faults data contains the following columns:

FaultType: (0-7), 0 represents fault-free operation and 1-7 represent the type of fault

OperationMode: (‘L’, ‘M’), where ‘L’ is Limited power mode (IPPT) and ‘M’ is Maximum power mode (MPPT)

Time: Time in seconds, average sampling T_s=5000ms.

Ipv: PV array current measurement.

Vpv: PV array voltage measurement.

Vdc: DC voltage measurement.

ia, ib, ic: 3-Phase current measurements.

va, vb, vc: 3-Phase voltage measurements.

Iabc: Current magnitude.

If: Current frequency.

Vabc: Voltage magnitude.

Vf: Voltage frequency.

In our application, we’re going to randomly generate the values for these columns and push them to the Push Dataset.
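The random generation step could look something like the sketch below. The column names come from the list above; the numeric ranges are arbitrary placeholders rather than realistic PV measurements, and the helper names (`generateReading`, `toRow`) are hypothetical. Whether the Push Data API expects each row as an array of values in column order, as `toRow` produces, is also an assumption, so check it against your Wyn Enterprise version.

```javascript
// Sketch only: ranges are placeholders, not real GPVS measurement limits.

// Column names from the GPVS-Faults list, in the same order.
const COLUMN_NAMES = [
  "FaultType", "OperationMode", "Time", "Ipv", "Vpv", "Vdc",
  "ia", "ib", "ic", "va", "vb", "vc", "Iabc", "If", "Vabc", "Vf",
];

function randomBetween(min, max) {
  return min + Math.random() * (max - min);
}

function randomInt(min, max) {
  // Inclusive on both ends.
  return Math.floor(randomBetween(min, max + 1));
}

function generateReading(timeSeconds) {
  // Round measurements to three decimals to keep payloads small.
  const measurement = (min, max) => Number(randomBetween(min, max).toFixed(3));
  return {
    FaultType: randomInt(0, 7), // 0 = fault-free, 1-7 = fault type
    OperationMode: Math.random() < 0.5 ? "L" : "M",
    Time: timeSeconds,
    Ipv: measurement(0, 10),
    Vpv: measurement(0, 200),
    Vdc: measurement(0, 200),
    ia: measurement(-10, 10),
    ib: measurement(-10, 10),
    ic: measurement(-10, 10),
    va: measurement(-200, 200),
    vb: measurement(-200, 200),
    vc: measurement(-200, 200),
    Iabc: measurement(0, 10),
    If: measurement(49, 51),
    Vabc: measurement(0, 200),
    Vf: measurement(49, 51),
  };
}

// Assumed row shape: one array of values, ordered like COLUMN_NAMES.
function toRow(reading) {
  return COLUMN_NAMES.map((name) => reading[name]);
}

console.log(toRow(generateReading(0)));
```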

PushData API structure:

- URL: api/pushdatasets/{datasetId}/data

- Method: POST

- Authorization: AllowAnonymous

- Request parameters:

| Parameter Name | Description |
| --- | --- |
| DatasetId | The Id of the Push Dataset. |
| Rows | The list of rows to push to the Dataset. |
| PushDataToken | The push data token of the dataset, used for validation when inserting data. |
| Overwrite | Whether the pushed rows overwrite the existing data in the Dataset. |
| Columns | The list of columns in the Push Dataset. Each column contains AddIndex, Name, Type, FieldDescription, DbColumnName. |
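To make the structure above concrete, here is a minimal sketch of two helpers: one that assembles the endpoint URL and one that posts the body through any axios-like client. `buildPushUrl` and `pushRows` are hypothetical names of our own; the only call assumed on the client is `post(url, body)` returning a promise with a `status` field, which is how axios behaves. Encoding the token with `encodeURIComponent` is our addition, in case the token contains characters that are not URL-safe.

```javascript
// Sketch only: helper names are hypothetical, not part of the Wyn API.

function buildPushUrl(wynUrl, datasetId, pushDataToken) {
  const base = wynUrl.replace(/\/+$/, ""); // avoid a double slash
  return (
    base +
    "/api/pushdatasets/" + encodeURIComponent(datasetId) +
    "/data?PushDataToken=" + encodeURIComponent(pushDataToken)
  );
}

// httpClient is anything with an axios-style post(url, body) method.
async function pushRows(httpClient, config, columns, rows, overwrite = false) {
  const url = buildPushUrl(config.WynUrl, config.DatasetId, config.PushDataToken);
  const response = await httpClient.post(url, { columns, rows, overwrite });
  return response.status;
}

// Example usage with axios (values are placeholders):
// const axios = require("axios");
// pushRows(axios, config, cols, rows).then((status) => console.log(status));
console.log(buildPushUrl("http://localhost:51980", "my-dataset-id", "my-token"));
```

Passing the client in as a parameter keeps the helper easy to exercise with a stub, without a running Wyn server.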

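Example:

    // wynUrl, datasetId and pushDataToken are read from config.json;
    // cols and rows hold the column definitions and the data to push.
    const axios = require("axios");

    axios
      .post(wynUrl + "/api/pushdatasets/" + datasetId + "/data?PushDataToken=" + pushDataToken, {
        "columns": cols,
        "rows": rows,
        "overwrite": false
      })
      .then(res => {
        console.log(res);
      })
      .catch(error => {
        console.error(error);
      });

Clear Push Dataset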

With a huge amount of data being pushed to the Dataset from various systems, it makes sense to clear data once it has served its purpose, so that it does not overload the server. The Clear Data API comes in handy for this purpose.

ClearData API structure:

- URL: api/pushdatasets/{datasetId}/data

- Method: DELETE

- Authorization: AllowAnonymous

- Request parameters:

| Parameter Name | Description |
| --- | --- |
| DatasetId | The Id of the Push Dataset. |
| ColumnName | The column used in the where condition. |
| Option | The comparison operator used in the where condition (for example, "="). |
| Value | The value compared against in the where condition. |
| PushDataToken | The push data token of the dataset, used for validation when clearing data. |

The Clear Data API supports only one condition (field) at a time.

- Response Body:

| Name | Description |
| --- | --- |
| Success | Whether the request succeeded. |
| Message | The error message, if the request failed. |
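The request and response described above can be sketched as two small helpers: one builds an axios-style request config for a single condition, the other turns the response body into a readable result. Both function names are hypothetical. The exact casing of the response fields (`Success` vs `success`) is an assumption on our part, so the sketch accepts either.

```javascript
// Sketch only: helper names are hypothetical, not part of the Wyn API.

function buildClearRequest(config, columnName, option, value) {
  const base = config.WynUrl.replace(/\/+$/, "");
  return {
    method: "delete",
    url:
      base +
      "/api/pushdatasets/" + encodeURIComponent(config.DatasetId) +
      "/data?PushDataToken=" + encodeURIComponent(config.PushDataToken),
    headers: { "Content-Type": "application/json" },
    // Only one condition is supported per call, so one column/option/value.
    data: JSON.stringify({
      DatasetId: config.DatasetId,
      ColumnName: columnName,
      Option: option,
      Value: String(value),
      PushDataToken: config.PushDataToken,
    }),
  };
}

function describeClearResult(body) {
  // Field casing is assumed; accept both "Success" and "success".
  const success = body.Success ?? body.success;
  const message = body.Message ?? body.message ?? "";
  return success ? "cleared" : "clear failed: " + message;
}

// Example usage with axios (config values are placeholders):
// const axios = require("axios");
// axios(buildClearRequest(config, "Time", "=", 5000))
//   .then((res) => console.log(describeClearResult(res.data)));
```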

Example:

    // The request body carries a single condition: Time = 5000.
    var data = {
      "DatasetId": datasetId,
      "ColumnName": "Time",
      "Option": "=",
      "Value": "5000",
      "PushDataToken": pushDataToken
    };

    var axiosConfig = {
      method: 'delete',
      url: wynUrl + "/api/pushdatasets/" + datasetId + "/data?PushDataToken=" + pushDataToken,
      headers: {
        'Content-Type': 'application/json'
      },
      data: JSON.stringify(data)
    };

    axios(axiosConfig)
      .then(res => {
        console.log(`statusCode: ${res.status}`);
        console.log(res.data);
      })
      .catch(error => {
        console.error(error);
      });

Run the application

Now that we have the APIs added in our application, it is time to run it. Download the complete application from GitHub. It contains three projects (PushDataset, PushDatasetServiceWindows and PushDatasetServiceLinux).

PushDataset – Contains the APIs and logic for pushing data to the Push Dataset every 5 seconds and clearing data every 24 hours.

PushDatasetService – Deploy/install the above app as a service on Windows.

PushDatasetServiceLinux – Deploy/install the above app as a service on Linux.
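The push-every-5-seconds, clear-every-24-hours behavior described for the PushDataset project could be scheduled roughly as follows. This is our own sketch, not the code from the downloadable application: `startSchedule`, `pushFn` and `clearFn` are hypothetical names, and the timer functions are passed in as a parameter only so the schedule can be exercised without waiting.

```javascript
// Sketch only: not the scheduling code from the sample application.
const PUSH_EVERY_MS = 5 * 1000; // every 5 seconds
const CLEAR_EVERY_MS = 24 * 60 * 60 * 1000; // every 24 hours

function startSchedule(pushFn, clearFn, timers = { setInterval, clearInterval }) {
  // Wrap each job so one failed request does not stop the schedule.
  const safely = (fn, label) => () => {
    Promise.resolve()
      .then(fn)
      .catch((error) => console.error(label + " failed:", error && error.message));
  };

  const pushTimer = timers.setInterval(safely(pushFn, "push"), PUSH_EVERY_MS);
  const clearTimer = timers.setInterval(safely(clearFn, "clear"), CLEAR_EVERY_MS);

  // Return a function that cancels both timers (useful on shutdown).
  return function stop() {
    timers.clearInterval(pushTimer);
    timers.clearInterval(clearTimer);
  };
}

// Example usage (pushReading and clearOldData are placeholders):
// const stop = startSchedule(pushReading, clearOldData);
// process.on("SIGINT", () => { stop(); process.exit(0); });
```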

Steps to run the application:

- Open the PushDataset application folder and navigate to the src folder.

- Open config.json file and enter the values for ‘WynUrl’, ‘DatasetId’ and ‘PushDataToken’ attributes and save it.

- Open a command prompt (or terminal), navigate to your application folder and run the following commands:

npm install

npm run start

The application will run and data will start getting pushed to the ‘GPVS-Faults’ Push Dataset.

Steps to deploy the application as a service:

To deploy the application as a service, which runs in the background, you can use one of the following node packages:

For Windows – node-windows

For Linux – node-linux

- Run the following command for the PushDataset application:

npm run build

This will bundle up the modules and pushdataset.js into a single file ‘bundle.js’ and will also copy the ‘config.json’ file to the dist folder.

- Copy both these files to the dist folder in the PushDatasetService (or PushDatasetServiceLinux) application.

- Run the following command for the PushDatasetService (or PushDatasetServiceLinux) application:

npm install

- Run the following command to install the service:

npm run installservice

- To uninstall the service, run the following command:

npm run uninstallservice

Preview Push Dataset

You can now use the Dataset to design a Dashboard or a Report for analysis.

Conclusion

Push Datasets are increasingly being implemented in various industries and applications for better real-time analysis and business intelligence. They have become popular for stock analysis, fault detection and even forming marketing strategies.
